Here is an example of how to create a model for instance segmentation:

import torch
import torchvision
from torchvision.models.detection.faster_rcnn import FastRCNNPredictor
from torchvision.models.detection.mask_rcnn import MaskRCNNPredictor


def get_instance_segmentation_model(hidden_layer_size, box_score_thresh=0.5):
    # our dataset has two classes only (background and dolphin)
    num_classes = 2
    # load an instance segmentation model pre-trained on COCO
    model = torchvision.models.detection.maskrcnn_resnet50_fpn(
        pretrained=True,
        box_score_thresh=box_score_thresh,
    )
    # get the number of input features for the box classifier
    in_features = model.roi_heads.box_predictor.cls_score.in_features
    # replace the pre-trained box head with a new one
    model.roi_heads.box_predictor = FastRCNNPredictor(in_features, num_classes)
    # now get the number of input features for the mask classifier
    in_features_mask = model.roi_heads.mask_predictor.conv5_mask.in_channels
    # and replace the pre-trained mask head with a new one
    model.roi_heads.mask_predictor = MaskRCNNPredictor(
        in_channels=in_features_mask,
        dim_reduced=hidden_layer_size,
        num_classes=num_classes,
    )
    return model


# use the GPU if one is available, otherwise the CPU
device = torch.device("cuda") if torch.cuda.is_available() else torch.device("cpu")

# get the model using our helper function
model = get_instance_segmentation_model(hidden_layer_size=256)
# move model to the right device
model.to(device)

# construct an optimizer
params = [p for p in model.parameters() if p.requires_grad]
optimizer = torch.optim.SGD(params, lr=0.005, momentum=0.9, weight_decay=0.0005)
# and a learning rate scheduler which decreases the learning rate by
# 10x every 10 epochs
lr_scheduler = torch.optim.lr_scheduler.StepLR(optimizer, step_size=10, gamma=0.1)
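A quick way to check what this scheduler does is to evaluate the closed-form StepLR rule in plain Python: lr = base_lr × gamma^(epoch // step_size). The sketch below plugs in the values from the code above (base learning rate 0.005, step_size=10, gamma=0.1). step_lr is a hypothetical helper for illustration and is not part of PyTorch.

```python
def step_lr(base_lr: float, epoch: int, step_size: int, gamma: float) -> float:
    """Learning rate used during (0-indexed) epoch `epoch` under a StepLR schedule,
    assuming scheduler.step() is called once at the end of every epoch."""
    # The rate is multiplied by gamma once every step_size epochs.
    return base_lr * gamma ** (epoch // step_size)


# Learning rate at a few epochs for the settings used above.
for epoch in (0, 9, 10, 19):
    print(epoch, step_lr(0.005, epoch, step_size=10, gamma=0.1))
```

With num_epochs = 20, this gives 0.005 for epochs 0 to 9 and 0.0005 for epochs 10 to 19.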

To train the model, we call the train_one_epoch helper once per epoch inside a training loop. If a saved model already exists, the loop runs for a single epoch; otherwise it runs for 20 epochs:

from pathlib import Path

if Path("saved_models").exists():
    saved_model_path = Path("./saved_models/model.pt")
else:
    saved_model_path = Path("./notebooks/saved_models/model.pt")

if saved_model_path.exists():
    num_epochs = 1
else:
    num_epochs = 20

data_loader, data_loader_test = get_dataset(
    "segmentation", batch_size=4, get_tensor_transforms=get_my_tensor_transforms
)

for epoch in range(num_epochs):
    # train for one epoch, printing every 20 iterations
    train_one_epoch(model, optimizer, data_loader, device, epoch, print_freq=20)
    # update the learning rate
    lr_scheduler.step()
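The path lookup and the choice of epoch count above can be folded into one small function. This is only a sketch. The name pick_checkpoint_and_epochs and its default arguments are hypothetical. Like the original code, it only decides how many epochs to run and does not load any saved weights.

```python
from pathlib import Path
from typing import Tuple


def pick_checkpoint_and_epochs(
    root: Path = Path("."),
    full_epochs: int = 20,
    resume_epochs: int = 1,
) -> Tuple[Path, int]:
    """Return the expected model checkpoint path and how many epochs to train."""
    # Prefer <root>/saved_models if that directory exists...
    if (root / "saved_models").exists():
        path = root / "saved_models" / "model.pt"
    # ...otherwise fall back to <root>/notebooks/saved_models.
    else:
        path = root / "notebooks" / "saved_models" / "model.pt"
    # If a saved model is already there, train for only a few epochs.
    num_epochs = resume_epochs if path.exists() else full_epochs
    return path, num_epochs
```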

Show predictions on a single input image:

# pick one image from the test set
img, _ = data_loader_test.dataset[0]
show_prediction(model, img)

We can also show predictions for the whole dataset, or for a subset of it, from a data loader object. For example, the following shows the predictions for the first two elements of the test data loader:

show_predictions(model, data_loader=data_loader_test, n=2, score_threshold=0.5)

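The get_true_and_predicted_masks helper returns the image together with a dictionary holding the true masks and the masks predicted with a score above the given threshold (0.5 here). Each entry is a three-dimensional array with one mask per instance. We can use it to compare the number of dolphins in a photo with the number of predicted masks:

_, masks = get_true_and_predicted_masks(model, data_loader_test.dataset[0], 0.5)
img, _ = data_loader_test.dataset[0]
print(
    f'There are {masks["true"].shape[0]} dolphins in the photo; '
    f'{masks["predicted"].shape[0]} masks are predicted with a score higher than 0.5'
)
assert len(masks["true"].shape) == 3
assert len(masks["predicted"].shape) == 3
show_prediction(model, img)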
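The asserts above check that both the true and the predicted masks are three-dimensional, with one H×W mask per instance. For each image, torchvision's Mask R-CNN returns soft masks of shape [N, 1, H, W] with values in [0, 1], along with per-detection scores. The sketch below shows one way such an output could be turned into a stack of binary masks above a score threshold. It assumes NumPy arrays and a 0.5 pixel threshold, and it is not necessarily what get_true_and_predicted_masks does internally. The name binarize_predictions is hypothetical.

```python
import numpy as np


def binarize_predictions(
    soft_masks: np.ndarray,  # shape (N, 1, H, W), values in [0, 1]
    scores: np.ndarray,  # shape (N,)
    score_threshold: float = 0.5,
    pixel_threshold: float = 0.5,
) -> np.ndarray:
    """Keep detections scoring above score_threshold and binarize their masks."""
    keep = scores > score_threshold
    # Drop the channel axis: (N, 1, H, W) -> (N, H, W).
    kept = soft_masks[keep][:, 0, :, :]
    # A pixel belongs to the instance if its mask probability exceeds pixel_threshold.
    return kept > pixel_threshold
```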

Metric explanation

To evaluate the instance segmentation results, we use a metric called Intersection over Union (IoU).

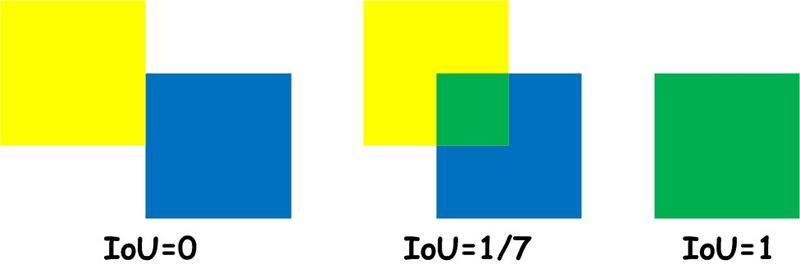

The IoU metric quantifies the overlap between the ground-truth (target) mask and the predicted (output) mask as a fraction between 0 and 1. That is, it divides the number of pixels shared by the ground-truth and predicted masks by the total number of pixels covered by either mask:

$$IoU = \frac{|target \cap prediction|}{|target \cup prediction|}$$

As a visual example, suppose we need to calculate the IoU score of a prediction mask A (colored yellow), given the ground-truth labeled mask B (colored blue). The intersection A∩B consists of the pixels found in both the prediction mask and the ground-truth mask (shown in green), whereas the union A∪B consists of all pixels found in either the prediction or the target mask.

Image credits: link


As the example above shows, the greater the intersection (overlap) between the ground-truth and predicted masks, the greater the IoU value. The maximum IoU value of 1 is obtained when the predicted and ground-truth masks overlap perfectly. The minimum value of 0 is obtained when they do not overlap at all.
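Here is a small worked example with made-up pixel counts. Suppose the target mask covers 100 pixels, the prediction covers 80 pixels, and 60 pixels are shared. The union counts the shared pixels only once, so

$$IoU = \frac{60}{100 + 80 - 60} = \frac{60}{120} = 0.5$$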

The explanation above applies to a single pair consisting of one ground-truth (true) mask and one predicted mask. The IoU metric for such a pair can be calculated as follows:

img, masks = get_true_and_predicted_masks(model, data_loader_test.dataset[0])
# calculate the IoU of the first predicted mask against the first true mask
iou_metric_mask_pair(
    binary_segmentation=masks["predicted"][0, :, :],
    binary_gt_label=masks["true"][0, :, :],
)
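For reference, the IoU of one binary mask pair fits in a few lines of NumPy. This is a minimal sketch, not the implementation of iou_metric_mask_pair. The name mask_iou is hypothetical, and so is the choice to return 0.0 when both masks are empty.

```python
import numpy as np


def mask_iou(pred: np.ndarray, target: np.ndarray) -> float:
    """IoU of two binary masks of the same shape (H, W)."""
    pred = pred.astype(bool)
    target = target.astype(bool)
    # Pixels set in both masks.
    intersection = np.logical_and(pred, target).sum()
    # Pixels set in at least one mask.
    union = np.logical_or(pred, target).sum()
    # Assumption: two empty masks score 0.0 instead of dividing by zero.
    return float(intersection / union) if union > 0 else 0.0
```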

In the instance segmentation task, the model may predict multiple masks for a single image. The predicted masks are not necessarily in the same order as the ground-truth masks; in fact, the two orders usually differ. In the example below, we have three true (ground-truth) masks but four predicted masks with a score larger than 0.5:

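import pandas as pd
import seaborn as sns

# IoU for every (predicted, true) mask pair: rows are predictions, columns are true masks
metrics = iou_metric_matrix_of_example(model, data_loader_test.dataset[0], 0.5)
cm = sns.light_palette("lightblue", as_cmap=True)
df = pd.DataFrame(metrics)
df.style.background_gradient(cmap=cm)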
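A matrix like the one above can be computed from stacked masks with NumPy broadcasting. The sketch below assumes predicted masks of shape (P, H, W) and true masks of shape (T, H, W). The name iou_matrix is hypothetical, and the helper is not necessarily how iou_metric_matrix_of_example works. It builds a (P, T, H*W) boolean intermediate, which is fine for a handful of masks per image.

```python
import numpy as np


def iou_matrix(pred_masks: np.ndarray, true_masks: np.ndarray) -> np.ndarray:
    """Return a (P, T) array whose entry [i, j] is the IoU of prediction i and true mask j."""
    h, w = pred_masks.shape[-2:]
    # Flatten every mask into a row of pixels: (P, H*W) and (T, H*W).
    pred = pred_masks.astype(bool).reshape(-1, h * w)
    true = true_masks.astype(bool).reshape(-1, h * w)
    # Pairwise intersections and unions: (P, 1, N) broadcast against (1, T, N).
    inter = np.logical_and(pred[:, None, :], true[None, :, :]).sum(axis=-1)
    union = np.logical_or(pred[:, None, :], true[None, :, :]).sum(axis=-1)
    # Assumption: pairs where both masks are empty get IoU 0.0.
    return np.divide(inter, union, out=np.zeros(inter.shape, dtype=float), where=union > 0)
```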

A single input image can contain more than one dolphin, and therefore multiple predicted and ground-truth masks. For such an image, we first calculate the IoU metric for every pair of predicted and ground-truth masks.

In the example above, there are three dolphins with three true masks, while the model predicted four masks. This is why the matrix has four rows (corresponding to predictions) and three columns (corresponding to ground truth). The first mask predicted by the model is represented by the first row (row 0). As we can see, it fits best with the third true mask (column 2). The second predicted mask is represented by the second row (row 1) and fits best with the first true mask (column 0), and so on. The last row is an extra prediction.

We then match the predicted masks to the true masks so that the total IoU score for the image is maximized. In the example above, the IoU values for the first, second, third and fourth predicted masks are taken as 0.691147, 0.586046, 0.514863 and 0.000, respectively. The IoU metric for this single image is the mean of these four values: (0.691147 + 0.586046 + 0.514863 + 0.000) / 4 ≈ 0.448. The last prediction is an extra, incorrect one, so it is assigned the value 0.000.
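One way to implement this matching is to try every one-to-one assignment of predictions to true masks and keep the assignment with the largest total IoU. Unmatched masks then contribute 0. The brute-force sketch below does exactly that. This is fine for a handful of dolphins per image, but the cost grows factorially. For larger matrices, scipy.optimize.linear_sum_assignment(cost_matrix, maximize=True) solves the same assignment problem efficiently. The text does not say which algorithm the helpers above use. The name image_iou is hypothetical. Dividing by the larger of the two mask counts (so that missed true masks are penalized too) is also an assumption, since the text only describes extra predictions.

```python
from itertools import permutations
from typing import Sequence


def image_iou(matrix: Sequence[Sequence[float]]) -> float:
    """Mean IoU of one image from a (predictions x true masks) IoU matrix.

    Each prediction is matched to at most one true mask (and vice versa) so that
    the total IoU is maximal; unmatched masks on either side count as 0.
    """
    n_pred = len(matrix)
    n_true = len(matrix[0]) if n_pred else 0
    if n_pred == 0 or n_true == 0:
        return 0.0
    best = 0.0
    if n_pred >= n_true:
        # rows[c] is the prediction (row) assigned to true mask (column) c.
        for rows in permutations(range(n_pred), n_true):
            best = max(best, sum(matrix[r][c] for c, r in enumerate(rows)))
    else:
        # cols[r] is the true mask (column) assigned to prediction (row) r.
        for cols in permutations(range(n_true), n_pred):
            best = max(best, sum(matrix[r][c] for r, c in enumerate(cols)))
    # Divide by the larger count so every unmatched mask contributes 0.
    return best / max(n_pred, n_true)


# A made-up 4 x 3 matrix whose best matching uses the three values quoted above.
example = [
    [0.10, 0.00, 0.691147],
    [0.586046, 0.05, 0.00],
    [0.00, 0.514863, 0.02],
    [0.30, 0.00, 0.00],
]
print(round(image_iou(example), 6))
```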

We repeat this for all images in the dataset and take the mean of the per-image IoU values to obtain the IoU metric for the entire dataset.

largest_values_in_row_colums(metrics)

Finally, we can compute the IoU metric for a whole image:

metric = iou_metric_example(model, data_loader_test.dataset[4], 0.5)
print(f"Average IoU metric on the given example is {metric:.3f}")

The same can be done for the entire test dataset. We sort the per-image results by IoU and show the predictions in that order:

iou, iou_df = iou_metric(model, data_loader_test.dataset)
iou_df.sort_values(by="iou").style.background_gradient(cmap=cm)
show_predictions_sorted_by_iou(model, data_loader_test.dataset)
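Averaging per-image scores into a dataset score, and ranking images from worst to best, only needs the standard library. In this sketch, dataset_iou is a hypothetical helper. It takes one IoU value per image, computed as described above, and is not the implementation of iou_metric. It raises statistics.StatisticsError on an empty input.

```python
from statistics import mean
from typing import List, Sequence, Tuple


def dataset_iou(per_image: Sequence[float]) -> Tuple[float, List[Tuple[int, float]]]:
    """Return the mean IoU over images and (index, IoU) pairs sorted from worst to best."""
    # Dataset-level metric: plain mean of the per-image IoU values.
    overall = mean(per_image)
    # Ascending order puts the images the model struggles with most first.
    ranked = sorted(enumerate(per_image), key=lambda item: item[1])
    return overall, ranked
```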